The libsodium cryptographic library added support for WebAssembly back in 2017.

Similar to the JavaScript version introduced 4 years before, WebAssembly is a first-class citizen.

This is an officially supported platform that every single release is extensively tested on, with high-level JavaScript wrappers (libsodium.js).

In spite of some caveats due to the platform itself, cryptography in a web browser has many valid use cases. From end-to-end encryption to authentication protocols and offline apps, having a library that runs in a browser and is fully interoperable with its server-side counterpart has proven to be very useful.

Emscripten

All of this wouldn’t have been possible without Emscripten, an invaluable tool to produce JavaScript, asm.js, and WebAssembly code out of LLVM bitcode.

Libsodium has always been an odd beast for Emscripten, though. This is not an application being ported to the web. This is a library, designed to be used as a standard JavaScript module, similar to how people envision using WebAssembly today.

Due to its nature, we hit some roadblocks. However, Emscripten authors and contributors, as well as libsodium.js users, are amazing and helped overcome these, even when it required modifying Emscripten itself.

All the tests from the libsodium test suite can run in a web browser. That link also displays how long completing an individual test takes, so that WebAssembly implementations can be compared to each other.

Enter WASI

Now that LLVM has native support for WebAssembly and that the WASI interface has been proposed by Mozilla (and immediately got a lot of traction), it’s time to add a new officially supported platform to libsodium: wasm32-wasi.

This is not going to replace Emscripten builds, especially since WASI hasn’t been implemented in web browsers yet.

WebAssembly+WASI is designed to run server-side, or in desktop and mobile applications.

For applications running “at the edge” (or “serverless”, or “lambda functions”), the WebAssembly+WASI combination is a blessing. Cloud providers can use it to safely run untrusted code, with low latency, at (as advertised) close-to-native speed.

As they typically need to do authentication, signing, verification, hashing and encryption, having a set of cryptographic primitives can be tremendously useful to these applications.

Which is why libsodium 1.0.18 and beyond will officially support WebAssembly+WASI as a platform. Optimized, pre-compiled builds will be available for download.

By the way, libhydrogen was also updated to seamlessly and securely run on WASI, and I am currently working on yet another cryptographic library, wasm-crypto, written from scratch in AssemblyScript, and specifically designed for WebAssembly.

WebAssembly+WASI builds are very similar to native builds. They use a similar toolchain, and WASI is no different than a standard C library. In fact, it is a standard C library (musl libc), with a thin layer to communicate with the WebAssembly runtime.

As libsodium is mostly compute-only, and performs virtually no heap memory allocations, that thin layer is rarely used.

In that context, the only difference between native builds and WebAssembly+WASI builds is the fact that in the latter, the code is compiled to WebAssembly before being eventually compiled to native code.

Comparing both from a performance perspective is thus a good way to measure the overhead of having an intermediary WebAssembly representation. The libsodium test suite is quite extensive and triggers many different code paths with different opportunities for optimization.

Compiling libsodium to WebAssembly+WASI

The wasm32-wasi.sh script was used to compile libsodium to the new target.

After trying -Os, -O2, -O3 and -Ofast, it turns out that -O2 is the optimization level that produces the overall fastest running functions.

Tests were run on macOS 10.14.4, LLVM 8.0.0 installed via Homebrew, with the latest (7e11511abdda) version of Wasmtime as a runtime, as well as the latest version of WASI (24792713d7e3).

Wasmer doesn’t seem to support WASI preopened descriptors on a non-virtual filesystem yet, and Lucet only runs on Linux.

Hence the choice of Wasmtime. However, since Lucet uses the same compiler backend as Wasmtime, results should be very similar.

Optimization passes happen at two different stages: when generating the WebAssembly code, and then when generating native code from WebAssembly. The latter will vary according to the runtime.

For this reason, the benchmarks were run first without Cranelift optimizations, and then with optimizations (wasmtime -o). This is helpful to understand if the most effective optimizations come from the WebAssembly code generation or from the runtime.

Native builds were made using clang, without any special configuration. Compilation options such as stack overflow protection were not disabled. LTO was not enabled.

Library size

Something that struck me is the size of the final WebAssembly library: 278,330 bytes. Full build, uncompressed.

This is pretty damn small compared to the size of the same library compiled for macOS: 731,484 bytes. Stripped.
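To put the two sizes side by side, here is a quick back-of-the-envelope calculation. It only uses the two byte counts quoted above; the variable names are mine.

```python
# Sizes quoted in the text above.
wasm_size = 278_330    # bytes, WebAssembly build (full, uncompressed)
native_size = 731_484  # bytes, macOS build (stripped)

# Fraction of the native size taken by the WebAssembly build.
ratio = wasm_size / native_size

print(f"WebAssembly build is {ratio:.0%} of the native size")
print(f"Native build is {native_size / wasm_size:.1f}x larger")
```

In other words, the WebAssembly build is a bit more than a third of the size of the native one.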

WebAssembly benchmarks vs native

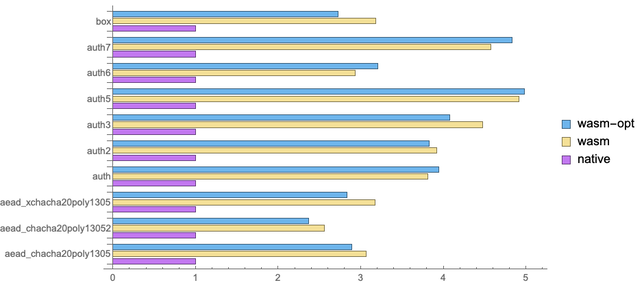

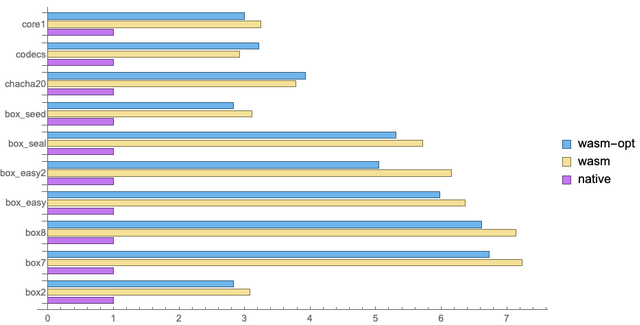

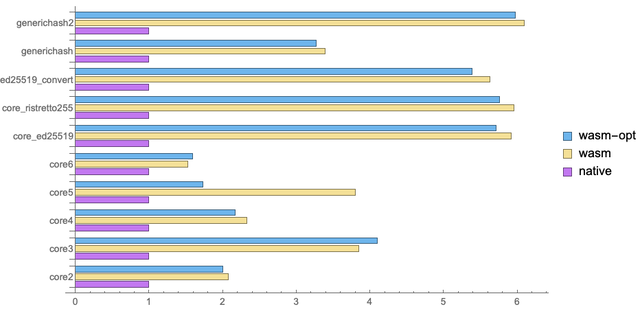

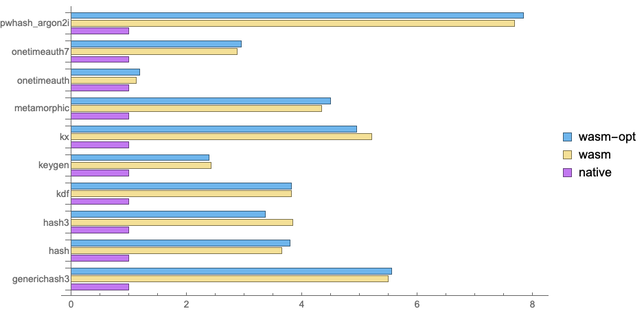

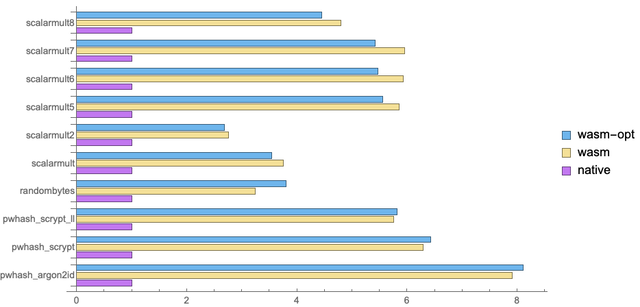

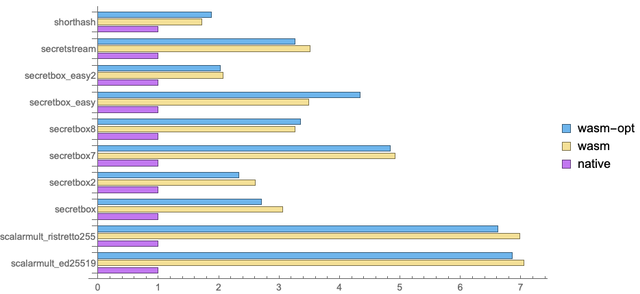

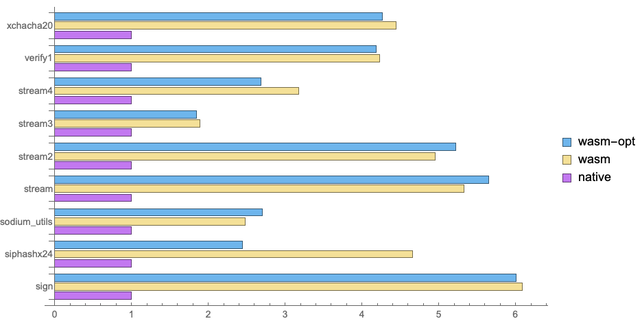

Since some tests can be significantly slower than others, results have been normalized.

This is a linear scale. The light blue and yellow bars represent the time taken to complete an individual test (over 100 iterations) compiled to WebAssembly, and run with Wasmtime, with the Cranelift backend. The compilation time is not included.
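For readers who want to reproduce this kind of chart, the sketch below shows one way to normalize timings. It assumes that each test is scaled so that the native build equals 1.0, which is my reading and not necessarily how the charts above were produced. The function name, the build labels and the timings are all made up for illustration; they are not measured results.

```python
def normalize(timings):
    """Scale each test's timings so that the native time becomes 1.0.

    `timings` maps a test name to a dict of seconds per build. Each
    inner dict must contain a "native" entry, used as the baseline.
    """
    normalized = {}
    for test, per_build in timings.items():
        native = per_build["native"]
        normalized[test] = {build: t / native for build, t in per_build.items()}
    return normalized


# Made-up timings in seconds, for illustration only (not measured results).
example = {
    "test_a": {"native": 0.5, "wasm": 2.0, "wasm_opt": 2.5},
    "test_b": {"native": 4.0, "wasm": 8.0, "wasm_opt": 6.0},
}

for test, ratios in normalize(example).items():
    print(test, ratios)
```

With this scaling, a fast test and a slow test can share the same axis, and each bar directly reads as a slowdown factor over native code.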

We can immediately see that the overhead of WebAssembly is far from negligible.

Computation-intensive tasks such as elliptic curve point multiplication get about 7 times slower, probably due to optimizations that couldn’t be achieved with WebAssembly. Note that the relevant code doesn’t use any assembly or SIMD instructions.

Password hashing is 8 times slower. This requires a ton of unpredictable, random memory accesses that don’t play well with WebAssembly.

An important point here is that the native code does use SIMD instructions (and on a more recent CPU than the one I have, the difference would have been even more significant), whereas the WebAssembly backend doesn’t support these.

However, there are no reasons to remove existing optimizations from native code. It remains a fair comparison: what we want to measure is the overhead of WebAssembly over native code, not over native code modified to be slower than usual.

Libsodium can also benefit quite a bit from common functions being inlined. Maybe the extra WebAssembly step prevents some of that inlining from happening.

On a surprising number of tests, Cranelift’s optimizations produced slightly slower code than with optimizations disabled. Maybe because unoptimized builds are producing smaller code that fits better in CPU caches.

The most effective optimizations happen during the first stage. And this is good news. Over time, WebAssembly runtimes will improve, and code will automatically get faster without developers having to make any changes to the modules they published.

Slower doesn’t mean slow

While the overhead of WebAssembly is significant, these figures should be put in perspective. The original, native code is extremely fast. Way faster than most applications need.

And even if WebAssembly could be faster, it is absolutely usable right now. Pure JavaScript versions of libsodium are significantly slower, and have been successfully used by many applications. WebAssembly builds, both with the Emscripten and the WASI layers, can only be faster than those.

Beyond the fact that runtimes still have room for more optimizations, these results also suggest that it may be worth writing optimized WebAssembly for critical code paths by hand, or using different tools than a non-specialized compilation toolchain.